Lab 4 Distributions II

Matthew J. C. Crump

9/17/2020

Lab4_Distributions_II.RmdOverview

In lecture you are learning about basic probability. In this lab we will continue to learn about working with distributions in R, and we will examine issues relating to basic probability.

We will look at three conceptual issues with probability, and gain practical skills in R for organizing and managing simulation data that will be useful for the rest of this course. In all of the examples we will generate events from particular distributions and examine them.

- Concept I: Probabilistic event generation

- Concept II: Experiencing probability

- Concept III: Subjective Probability

Concept I: Probabilisitic event generation

In this concept section we will use R to generate events with specified probabilities. We will mainly use the sample() function, which we are already familiar with. This concept section explores probabilistic event generation in a few different ways by examining a few probability problems:

Dice Problems

- Use R to roll a fair six-sided die, and show that each number comes up equally frequently in the long run. Roll the die 10,000 times, report how many times each number (1-6) was rolled.

Each number should come up about 10000/6 = 1666.667 times.

rolls <- sample(1:6,10000, replace=TRUE)

table(rolls)

#> rolls

#> 1 2 3 4 5 6

#> 1645 1663 1625 1619 1726 1722- With a pair of six-sided dice it is possible to roll the numbers 2 to 12. Use a simulation of 10000 rolls (of a pair of dice) in R to calculate the probability of rolling each of the possible numbers.

one <- sample(1:6,10000, replace=TRUE)

two <- sample(1:6,10000, replace=TRUE)

combined <- one+two

table(combined)/10000

#> combined

#> 2 3 4 5 6 7 8 9 10 11 12

#> 0.0283 0.0571 0.0817 0.1129 0.1376 0.1661 0.1367 0.1139 0.0834 0.0553 0.0270Let’s compare the result of the simulation to the known probabilities. First, we need to determine the number of ways that each number can be obtained by rolling a pair of dice. We can use R to do this as well:

first <- rep(x= 1:6, each = 6)

second <- rep(x= 1:6, times = 6)

sum_rolls <- first+second

table(sum_rolls)/length(sum_rolls)

#> sum_rolls

#> 2 3 4 5 6 7 8

#> 0.02777778 0.05555556 0.08333333 0.11111111 0.13888889 0.16666667 0.13888889

#> 9 10 11 12

#> 0.11111111 0.08333333 0.05555556 0.02777778

## compare

sim_result <- table(combined)/10000

true_probs <- table(sum_rolls)/length(sum_rolls)

## Difference

true_probs-sim_result

#> sum_rolls

#> 2 3 4 5 6

#> -5.222222e-04 -1.544444e-03 1.633333e-03 -1.788889e-03 1.288889e-03

#> 7 8 9 10 11

#> 5.666667e-04 2.188889e-03 -2.788889e-03 -6.666667e-05 2.555556e-04

#> 12

#> 7.777778e-04Event generators

Remember that you can use sample() to generate events with specific probabilities:

- Generate P(“A”) = .8, and P(“B”) =.2, run the generator 20 times.

sample(c("A","B"), 20, replace = TRUE, prob = c(.8, .2))

#> [1] "A" "A" "A" "A" "A" "A" "A" "B" "A" "B" "A" "A" "A" "A" "B" "A" "B" "A" "A"

#> [20] "B"- Generate letters from the alphabet such that each letter could occur with equal probability. Generate 50 letters:

Note, conveniently, R contains a variable called letters, that is a vector of lowercase letters (and an uppercase one called LETTERS)

letters

#> [1] "a" "b" "c" "d" "e" "f" "g" "h" "i" "j" "k" "l" "m" "n" "o" "p" "q" "r" "s"

#> [20] "t" "u" "v" "w" "x" "y" "z"

LETTERS

#> [1] "A" "B" "C" "D" "E" "F" "G" "H" "I" "J" "K" "L" "M" "N" "O" "P" "Q" "R" "S"

#> [20] "T" "U" "V" "W" "X" "Y" "Z"

sample(letters,50,replace=TRUE)

#> [1] "t" "l" "m" "x" "l" "t" "q" "q" "i" "d" "g" "n" "m" "a" "k" "z" "v" "z" "u"

#> [20] "d" "i" "t" "q" "g" "h" "b" "k" "a" "v" "l" "n" "b" "v" "o" "m" "x" "k" "x"

#> [39] "s" "n" "q" "p" "v" "o" "h" "g" "y" "y" "m" "i"- Create a random string generator that creates strings of random letters. For example a string of 5 random letters could look like “fjwud”. Generate 50 random letter strings, each with 5 random letters in it.

my_letters <- sample(letters,50*5,replace=TRUE)

# turn the vector into a matrix with 5 columns

my_strings <- matrix(my_letters, ncol=5)

# each row is a word, need to collapse the column to create a string

paste(my_strings[1,], collapse="")

#> [1] "wicbw"

# loop to collapse all of the rows into words

random_strings <-c()

for(i in 1:dim(my_strings)[1]){

random_strings[i] <- paste(my_strings[i,], collapse="")

}

random_strings

#> [1] "wicbw" "rlemn" "ambqn" "hknhu" "qyqpe" "ozldm" "xtsen" "ytazf" "tuhsy"

#> [10] "ftarr" "wkbau" "mdunu" "hoizu" "bpadg" "rmqsd" "ellwg" "nmzoe" "jgxis"

#> [19] "glfxj" "kcmbr" "axrdb" "ovadh" "nyjxs" "pkivx" "cxtln" "xgsks" "ryxpz"

#> [28] "scpxv" "ovepq" "vurwy" "ccrcp" "iynqe" "pfiqo" "scmfi" "yinme" "hvpmv"

#> [37] "gkcdn" "rfawq" "tlatl" "tkoqo" "xdrgr" "kgzbc" "uernh" "pfhos" "sothf"

#> [46] "bggai" "vprbr" "kcdnr" "kyaof" "bvjqt"Concept II: Experiencing probability

People talk about probabilities all of the time. For example, tomorrow might have a 10% chance of rain, and a fair coin has a 50% chance of landing heads or tails, are both common examples. We have already begun to look at how probabilities behave in lab 3, when we used R to flip a coin to demonstrate that a coin is fair in the long run. We expand on that demonstration here.

One takeaway point from the coin flipping example is that P(heads) = .5 (probability of getting a heads equals 50%), is only true “in the long run”. In the short run, you could get a bunch of tails.

Short-run coin flipping

- Consider flipping a fair coin ten times. What are the possible outcomes? And what is the probability of each of the outcomes? Let’s answer this question with a simulation in R.

Some of the possible outcomes are 10 Tails or 10 Heads, or any combination of heads and tails in between, which can be described as 0 heads to 10 heads.

sim_results <- replicate(10000,

sample( c(1,0), 10, replace=TRUE)

)

number_of_heads <- colSums(sim_results)

table(number_of_heads)/10000

#> number_of_heads

#> 0 1 2 3 4 5 6 7 8 9 10

#> 0.0015 0.0100 0.0477 0.1189 0.2015 0.2501 0.2070 0.1130 0.0398 0.0091 0.0014

# alternative solution using rbinom

number_of_heads <- rbinom(10000,10,prob=.5)

table(number_of_heads)/10000

#> number_of_heads

#> 0 1 2 3 4 5 6 7 8 9 10

#> 0.0009 0.0082 0.0442 0.1182 0.2062 0.2467 0.1988 0.1194 0.0473 0.0089 0.0012- If you flipped a coin 10000 times, you would find many different kinds of short-run sequences. For example, HH, HT, TH, and TT. What is the probability of each of these kinds of sequences?

flips <- sample(c("H","T"), 10000, replace=TRUE)

sequence <- c()

for(i in 2:length(flips)){

first_element <- flips[i-1]

second_element <- flips[i]

sequence[i-1] <- paste0(first_element,second_element)

}

table(sequence)/sum(table(sequence))

#> sequence

#> HH HT TH TT

#> 0.2598260 0.2456246 0.2456246 0.2489249

## 3 element sequences

flips <- sample(c("H","T"), 1000000, replace=TRUE)

sequence <- c()

for(i in 3:length(flips)){

first_element <- flips[i-2]

second_element <- flips[i-1]

third_element <- flips[i]

sequence[i-1] <- paste0(first_element,

second_element,

third_element)

}

table(sequence)/sum(table(sequence))

#> sequence

#> HHH HHT HTH HTT THH THT TTH TTT

#> 0.1258533 0.1251143 0.1245642 0.1250733 0.1251143 0.1245232 0.1250723 0.1246852Concept III: Subjective Probability

Vokey & Allen discuss the Bayesian concept of subjective probability, which is the practice of assigning probabilities to beliefs, and updating probabilities about the belief through a data-gathering process.

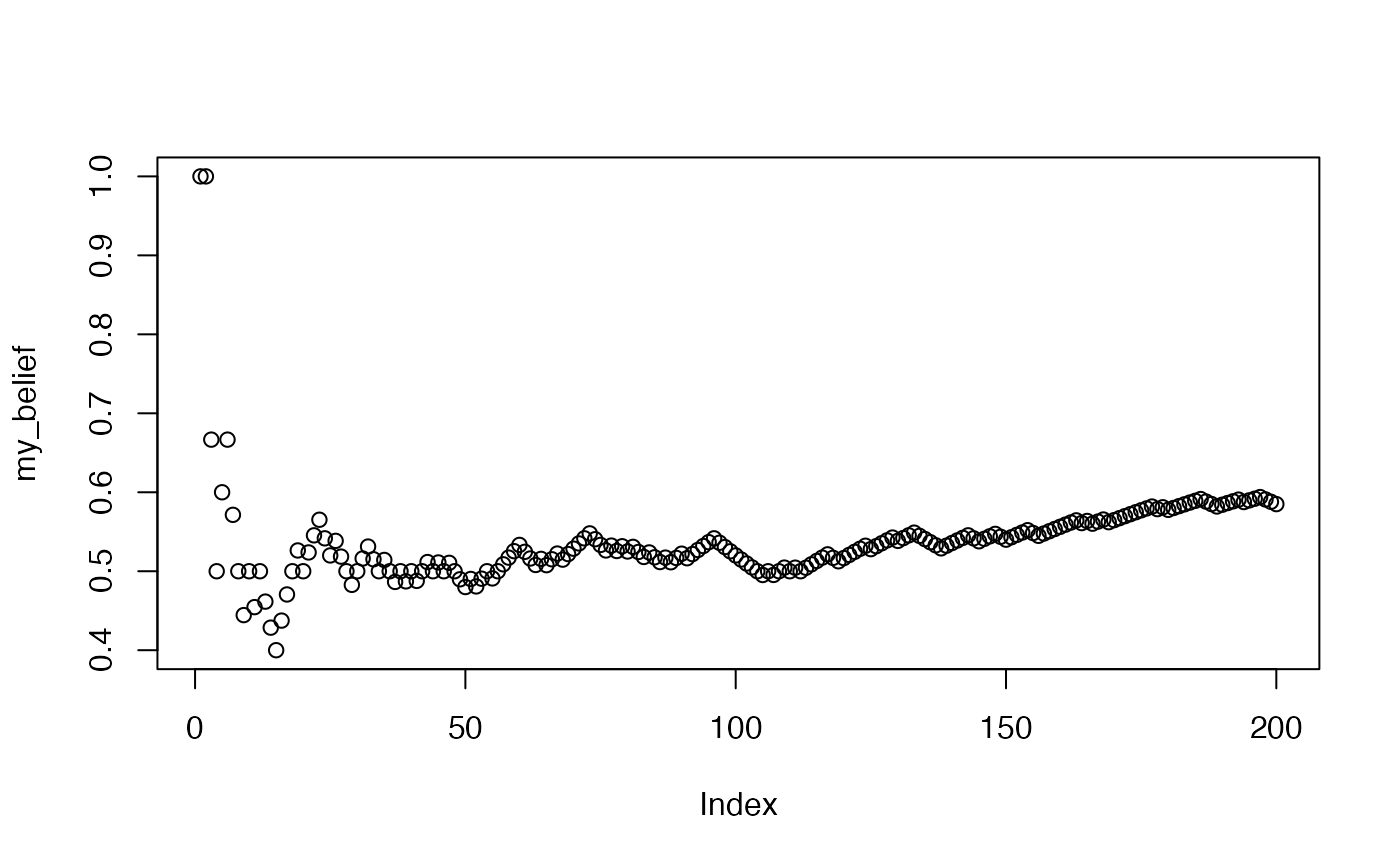

In this concept section we use R to demonstrate a basic example of belief updating.

First, we create a sequence of events. We will stick with coin flips. Let’s create a situation where event probability changes at some point, and our task will be to collect data and determine if we can update our beliefs about the world.

So, we will flip a fair coin 100 times, and then flip a biased coin 100 times. The biased coin will be more likely to come up heads (60%).

Next, imagine that you have no idea what kind of coins were being flipped, and all you have is the sequence of flips. You start at the first coin flip, and go through all of them, each time you will use the data to update your belief about the coin.

my_knowledge <- c()

my_belief <- c()

for(i in 1:length(simulated_sequence)){

my_knowledge[i] <- simulated_sequence[i]

my_belief[i] <- sum(my_knowledge)/length(my_knowledge)

}

plot(my_belief)

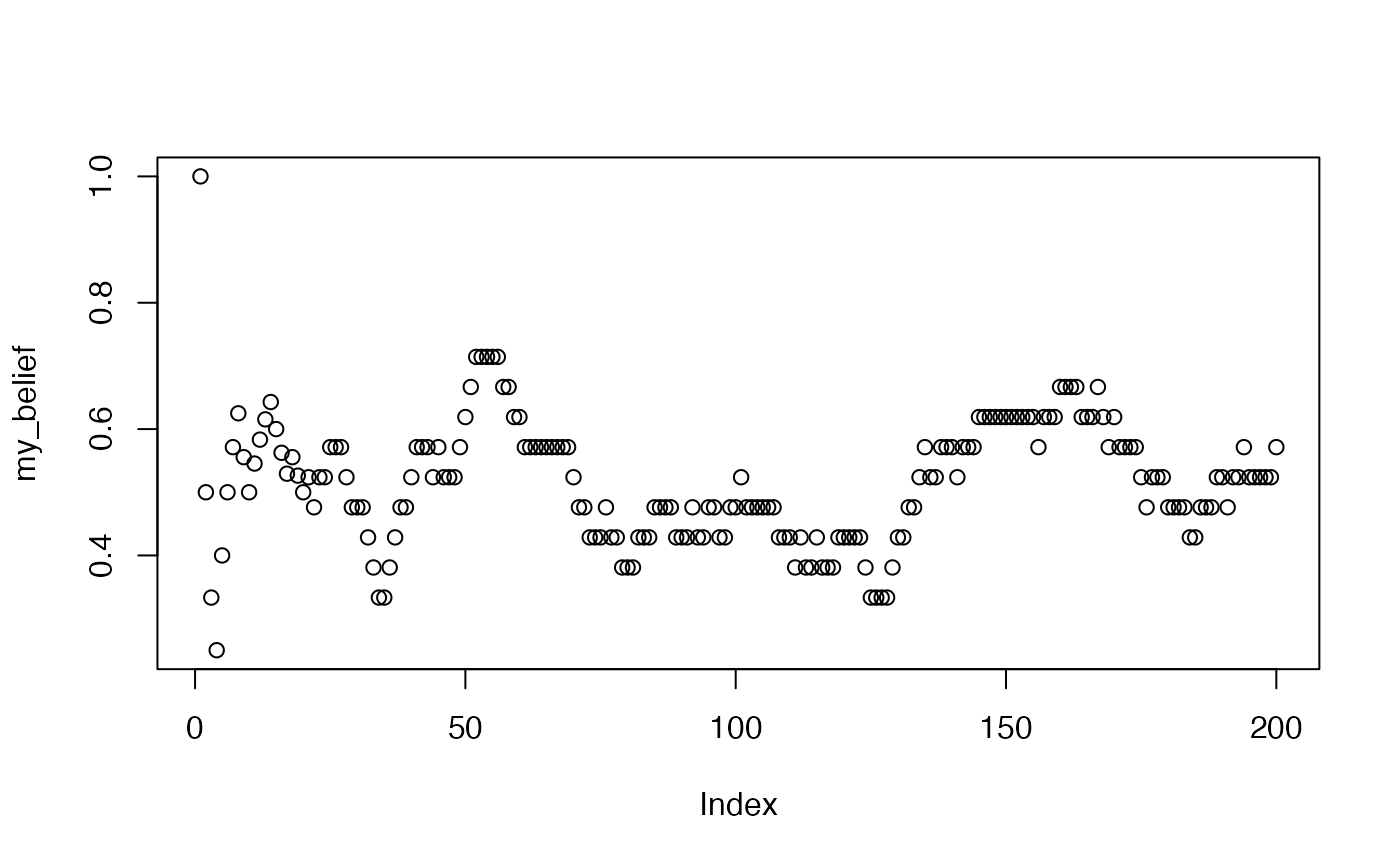

What do your beliefs about the probability of getting heads look like if you only allow yourself to remember the last 20 coin flips?

When would you be confident you had the correct belief about the probability of getting a heads?

simulated_sequence <- c(rbinom(100,1,.5),

rbinom(100,1,.6))

my_knowledge <- c()

my_belief <- c()

for(i in 1:length(simulated_sequence)){

my_knowledge[i] <- simulated_sequence[i]

if(i <= 20){

my_belief[i] <- sum(my_knowledge)/length(my_knowledge)

}else{

my_belief[i] <- sum(my_knowledge[i:(i-20)])/length(my_knowledge[i:(i-20)])

}

}

plot(my_belief)

Lab 4 Generalization Assignment

Instructions

In general, labs will present a discussion of problems and issues with example code like above, and then students will be tasked with completing generalization assignments, showing that they can work with the concepts and tools independently.

Your assignment instructions are the following:

- Work inside the R project “StatsLab1” you have been using

- Create a new R Markdown document called “Lab4.Rmd”

- Use Lab4.Rmd to show your work attempting to solve the following generalization problems. Commit your work regularly so that it appears on your Github repository.

- For each problem, make a note about how much of the problem you believe you can solve independently without help. For example, if you needed to watch the help video and are unable to solve the problem on your own without copying the answers, then your note would be 0. If you are confident you can complete the problem from scratch completely on your own, your note would be 100. It is OK to have all 0s or 100s anything in between.

- Submit your github repository link for Lab 4 on blackboard.

- There are five problems to solve

Problems

- Estimate the letter occurrence probabilities of all 26 letters in English by measuring a paragraph of English text from wikipedia. (hint use

strsplit()to split a paragraph into individual letters) (1 point).

my_paragraph <- "This is a paragraph, with some stuff in it. This is another sentence in the paragraph"

the_letters <- unlist(strsplit(my_paragraph, split=""))

the_letters

#> [1] "T" "h" "i" "s" " " "i" "s" " " "a" " " "p" "a" "r" "a" "g" "r" "a" "p" "h"

#> [20] "," " " "w" "i" "t" "h" " " "s" "o" "m" "e" " " "s" "t" "u" "f" "f" " " "i"

#> [39] "n" " " "i" "t" "." " " "T" "h" "i" "s" " " "i" "s" " " "a" "n" "o" "t" "h"

#> [58] "e" "r" " " "s" "e" "n" "t" "e" "n" "c" "e" " " "i" "n" " " "t" "h" "e" " "

#> [77] "p" "a" "r" "a" "g" "r" "a" "p" "h"Generate “random” strings of letters that are sampled from a distribution where letter occurrence probability is the same as natural English. Use the probabilities for each letter from this wikipedia article, or use your own estimates from the previous question (2 points).

Generate a random walk of 10,000 steps. In a random walk, you are simulating the process of randomly taking a step up or down, as if you are on an infinite staircase. At each step you flip a coin. If you get heads you go up one step, if you get tails you go down one step. Start on step 0, then simulate a random walk for 10,000 steps. Your vector should preserve the step number for each step. For example, if the the first three steps were all heads, then the vector would begin with 0,1,2,3, which indicates a single step up each time. Plot the first 1,000 steps. (1 point)

What was the most positive and most negative step reached out of 10,000? (1 point)

What was the longest run of steps where all steps were positive numbers. For example, in the sequence: 1,2,3,2,1,0,-1,-2,-1,-2,-1,0,1,2,3; the answer is 5 because the first five values were all positive, and this was the longest sequence of positive values. (1 point).