Lab 5 Sampling Distributions

Matthew J. C. Crump

10/2/2020

Lab5_Sampling_Distributions.RmdReview

In the last two labs we have begun to explore distributions, and have used R to create and sample from distributions. We will continue this exploration in many of the following labs.

As we continue I want to point out a major conceptual goal that I have for you. This is to have a nuanced understanding of one statement and one question:

- Chance can do things

- What can chance do?

To put this into concrete terms from previous labs. You know that ‘chance’ can produce different outcomes when you flip a coin. This is a sense in which chance do things. A 50% chance process can sometimes make a heads, and sometimes a tails. Also, we have started to ask “what can chance do?” in prior labs. For example, we asked how often chance produces 10 heads in a row, when you a flip a coin. We found that chance doesn’t do that very often, compared to say 5 heads and 5 tails.

We are using these next labs to find different ways to use R to experience 1) that chance can do things, and 2) how likely it is that some things happen by chance. We are working toward a third question (for next lab), which is 3) did chance do it?…or when I run an experiment, is it possible that chance alone produced the data that was collected?

Overview

This lab has the following modules:

- Conceptual Review I: Probability Distributions

- we review sampling from probability distributions using R and examine a few additional aspects of base R distribution functions

- Conceptual II: Sampling Distributions

- we use R to create a new kind of distribution, called a sampling distribution. This will prepare you for future statistics lectures and concepts that all fundamentally depend on sampling distributions.

- Understanding sampling distributions may well be the most fundamental thing to understand about statistics (but that’s just my opinion).

Probability Distributions

In previous labs we learned that it is possible to sample numbers from particular distributions in R.

#see all the distribution functions

?distributionsNormal Distribution

We use rnorm() to sample numbers from a normal distribution:

rnorm(n=10, mean = 0, sd = 1)

#> [1] 0.8935104 -1.4215508 -1.2075878 -0.7395785 -0.6251588 -0.2784598

#> [7] -0.1060565 -0.7599442 0.5009848 -2.0783704We can ‘see’ the distribution by sampling a large number of observations, and plotting them in a histogram:

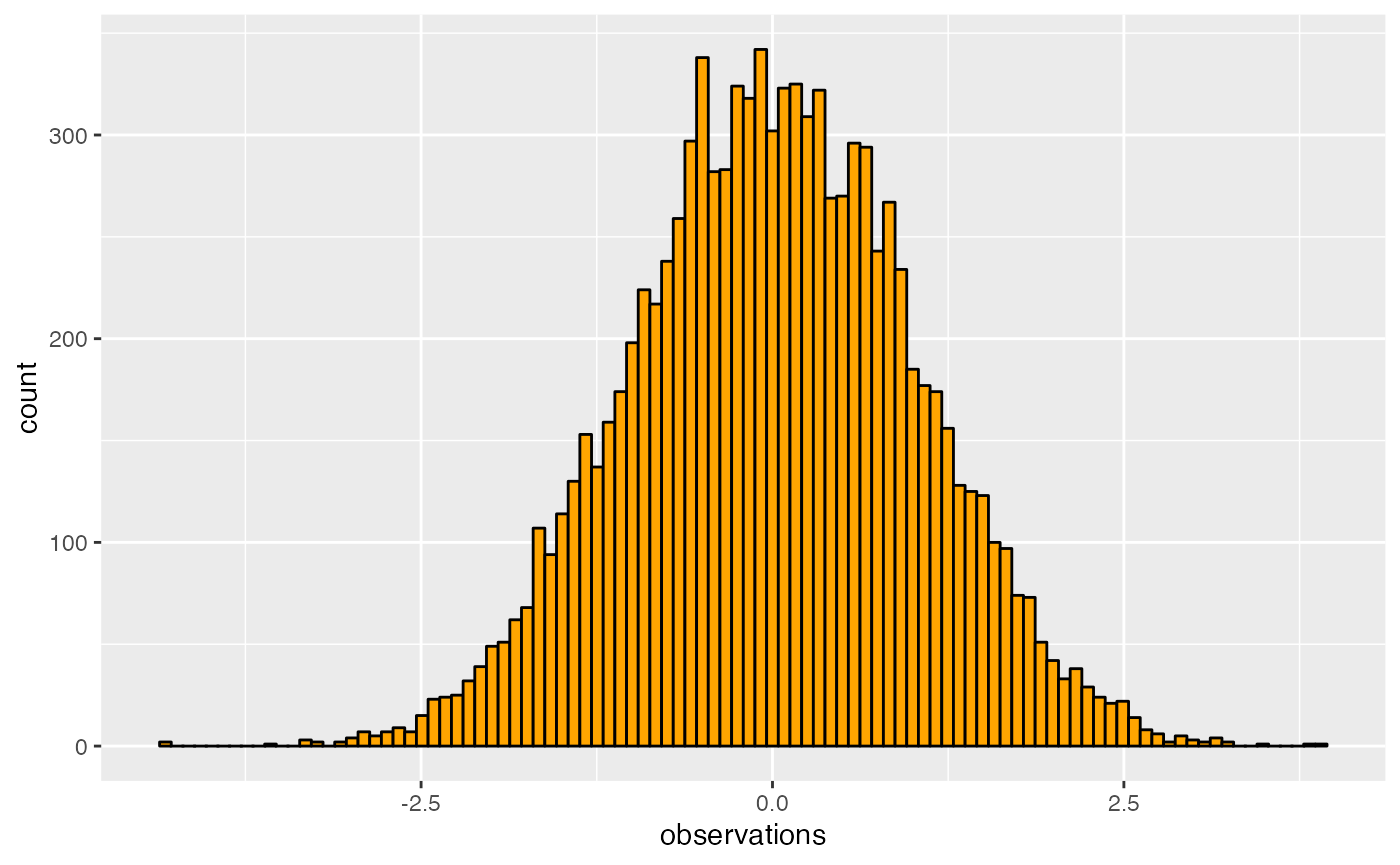

library(ggplot2)

some_data <- data.frame(observations = rnorm(n=10000, mean = 0, sd = 1),

type = "A")

ggplot(some_data, aes(x=observations)) +

geom_histogram(bins=100, color="black",

fill= 'orange')

We can see in this example that using random chance to sample from this distribution caused these numbers to be observed. So, we can see that “chance did something”. We can also see that chance did some things more than others. Values close to 0 were sampled much more often than values larger 2.5.

How often did we sample a value larger than 2.5? What is the probability that chance would produce a value larger than 2.5? What about any other value for this distribution? To answer these questions, we need to get more specific about exactly what chance in this situation, for this distribution, is capable of doing.

- We can answer a question like the above through observation. We can look at the sample that we generated, and see how many numbers out of the total are larger than a particular value:

some_data$observations[some_data$observations > 1.64]

#> [1] 1.760586 1.791571 2.098474 2.529518 1.784727 2.201683 1.733999 2.260419

#> [9] 1.657527 2.467491 2.276430 1.851917 1.873181 1.943389 1.946778 2.307982

#> [17] 2.342829 1.799318 2.027029 1.861938 1.682137 1.899182 1.888689 1.698997

#> [25] 2.341960 1.674597 2.718092 1.963650 1.764546 1.796935 2.054515 1.895980

#> [33] 1.686357 1.975606 1.845671 1.806528 1.749081 2.233970 1.808801 1.752264

#> [41] 2.946963 1.932932 2.234529 1.818683 1.658413 1.659447 1.671009 1.949254

#> [49] 2.189468 1.966200 1.867912 1.789596 2.344188 1.703131 1.703046 1.667919

#> [57] 2.445243 1.698473 2.089467 1.668115 1.989518 1.716363 2.301081 1.833916

#> [65] 1.992669 2.438992 1.838419 1.726056 2.051362 2.185332 1.724040 1.958634

#> [73] 1.656040 1.961088 1.684598 1.788965 1.758057 1.794395 3.166886 1.884531

#> [81] 1.774611 2.043917 2.678680 1.796512 1.811135 1.735151 1.681603 2.009908

#> [89] 1.797347 1.770643 2.058168 1.783688 3.127104 2.091459 1.673301 2.276191

#> [97] 1.654108 2.250417 2.179499 1.813661 1.682503 1.827319 2.103998 1.699982

#> [105] 2.189507 1.924981 1.862130 1.965161 2.452109 1.728233 2.751099 1.833514

#> [113] 1.950022 2.015896 1.681530 2.474557 1.811117 2.369065 2.638827 1.963749

#> [121] 1.832892 1.841777 1.995814 1.648767 1.920104 1.729433 1.652951 1.758807

#> [129] 1.969002 1.863032 2.299555 2.692802 2.189451 1.765737 1.773206 2.036746

#> [137] 2.027971 1.815032 1.885643 2.473710 2.400303 2.222611 1.736516 1.720432

#> [145] 1.724793 1.819062 2.196989 2.462644 2.375139 1.919844 2.222432 2.244338

#> [153] 1.937304 1.676788 2.354483 1.982931 1.774438 1.640061 1.826567 1.927683

#> [161] 1.669911 1.927039 1.757221 1.678199 1.947413 2.957907 3.045287 2.043407

#> [169] 1.870146 1.788740 2.691582 2.592124 1.923475 1.680038 1.729905 2.170492

#> [177] 2.052937 3.175312 2.151703 1.996425 3.257148 2.070750 1.989562 1.880196

#> [185] 2.408870 2.375300 1.785669 1.900169 1.855787 1.798892 1.775990 2.416538

#> [193] 2.178420 1.647637 2.035616 1.891147 1.744516 1.913240 2.315355 1.825372

#> [201] 1.887125 2.304336 1.732368 1.911318 1.668008 1.751385 1.654036 1.790363

#> [209] 2.923655 2.551856 2.765893 2.428585 2.540872 2.157477 1.937589 1.876756

#> [217] 1.758715 1.996928 2.513148 2.576234 2.456620 1.790582 2.217739 1.709535

#> [225] 1.662503 1.996611 2.513713 1.872950 1.883224 2.107707 2.175087 1.971081

#> [233] 2.046494 1.878899 2.585927 3.864441 2.187083 2.423800 2.100143 1.794947

#> [241] 2.319011 2.451721 1.686019 2.117405 1.833920 2.561782 1.651974 2.318016

#> [249] 2.414755 1.821578 2.004267 2.323310 2.467306 2.022957 1.662159 1.709361

#> [257] 1.800023 1.705557 2.380492 1.946467 2.631018 1.685283 1.793060 1.814843

#> [265] 1.658792 1.710380 1.960013 1.738682 2.458532 1.643611 2.538339 2.034673

#> [273] 2.565900 1.857944 2.144765 2.285193 2.034542 2.207439 2.341673 1.776476

#> [281] 2.081860 2.039284 1.790739 1.849034 1.992914 2.137316 2.410385 1.700420

#> [289] 1.694905 1.674388 1.898309 2.679764 2.360422 2.184947 2.052852 1.770949

#> [297] 2.889130 1.687896 2.066637 2.513564 2.301588 1.853401 1.828857 2.058102

#> [305] 2.299612 3.199356 1.674969 1.874309 2.658389 1.714218 2.213517 1.780133

#> [313] 2.516233 2.106674 2.196886 2.332389 2.440045 1.717591 1.805848 1.732689

#> [321] 1.674068 1.754441 2.502269 2.896941 1.768648 1.722134 1.868387 1.654989

#> [329] 1.909016 2.757048 1.851591 2.011433 2.164829 2.514830 1.695469 2.803082

#> [337] 1.853242 1.659606 1.866124 1.659360 1.854157 2.273972 1.857783 1.726854

#> [345] 1.697237 2.505317 1.965173 2.025586 2.316145 1.656434 3.191895 2.250939

#> [353] 1.658912 2.263900 1.665657 1.932778 1.815181 1.664144 1.669367 1.950910

#> [361] 2.083779 2.393134 2.195831 1.658198 2.019144 2.135762 1.878846 3.073525

#> [369] 2.089023 2.143259 1.701462 1.753103 3.800149 1.874206 1.781077 2.025732

#> [377] 1.783709 2.051581 2.606275 1.836765 2.211991 2.119738 1.751674 2.090851

#> [385] 1.879851 2.137058 2.194492 2.568715 2.134486 1.819064 1.641977 1.705737

#> [393] 1.803200 2.130584 2.622991 2.373651 1.717643 1.918177 1.854487 2.376132

#> [401] 1.738609 2.138579 1.903723 1.713280 2.098336 2.222599 2.143300 1.796242

#> [409] 1.689906 2.240249 2.727631 1.740675 1.679002 2.169651 2.292820 2.233318

#> [417] 1.745601 2.289266 1.889925 2.032452 2.267104 1.657769 2.003545 2.120209

#> [425] 1.794383 2.182557 1.865719 1.740227 1.678380 2.127678 3.012441 1.651534

#> [433] 1.711374 1.989383 1.740099 2.372682 1.699702 1.843346 2.232129 1.795315

#> [441] 1.720266 2.464699 2.151461 2.214182 2.019528 1.680420 1.782323 2.069879

#> [449] 2.022392 2.037554 2.954573 1.787889 2.575564 1.808978 1.744343 2.231983

#> [457] 1.714629 1.996642 2.476506 2.254615 1.916857 2.802243 1.746662 2.393792

#> [465] 1.819664 1.966424 2.504917 2.074914 1.811380 2.120601 2.255442 1.779560

#> [473] 2.123039 1.844472 1.836954 1.693135 1.808856 2.131138 2.293858 1.748970

#> [481] 1.697530 1.886307 1.659390 2.327635 2.214235 1.940034 1.940073 1.686233

#> [489] 2.108633 1.902367 2.482390 1.921889 1.805667 1.743661 1.654877 1.925929

#> [497] 2.891174 1.890818 1.763052 2.554143 2.185373 1.775548 1.667703 2.184591

#> [505] 1.738304 2.049092 1.696810 2.616159 1.794502 1.682229 2.011384 2.312483

#> [513] 2.164304 1.782326 2.776430 1.647719 3.478445 2.432400 2.434496 1.672186

#> [521] 2.204222 2.503900 1.764075 2.549219 2.026326 1.668055 2.213328 2.025394

#> [529] 1.827747

length(some_data$observations[some_data$observations > 1.64])

#> [1] 529

length(some_data$observations[some_data$observations > 1.64])/10000

#> [1] 0.0529- We could also compute the probability directly using analytical formulas. And, these formulas also exist in R. Specifically, distribution formulas begin with

d,p,q, andr, so there arednorm,pnorm,qnorm, andrnormfunctions for the normal distribution (and other distributions).

rnorm()

rnorm(n, mean = 0, sd = 1) samples observations (or random deviates) from a normal distribution with specified mean and standard deviation.

rnorm(n=10, mean = 0, sd = 1)

#> [1] 1.58527090 0.40046954 -0.72756851 0.77343680 -0.07487444 -0.93723841

#> [7] 0.02954117 -0.49584267 0.54400747 0.18398787dnorm()

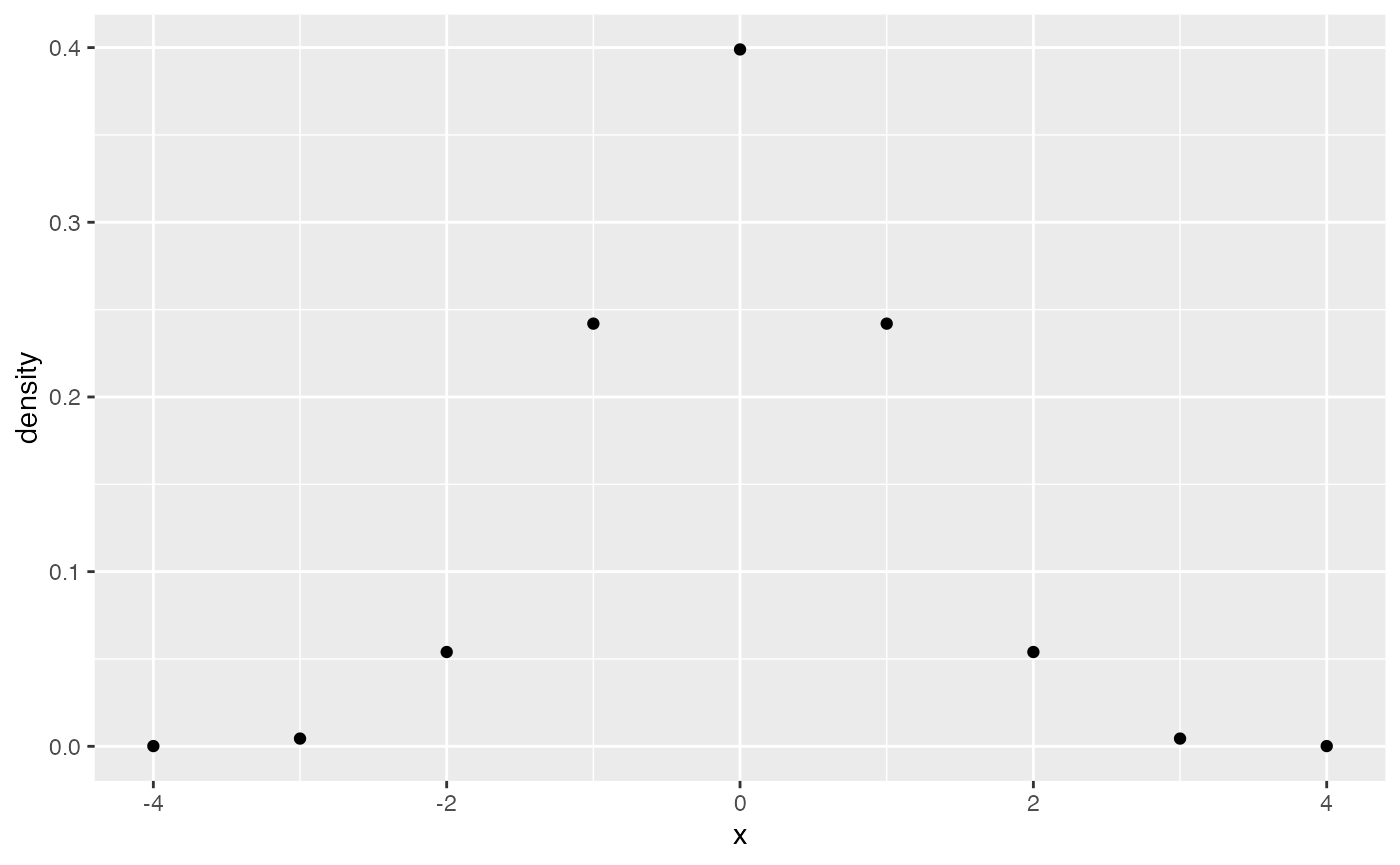

dnorm(x, mean = 0, sd = 1, log = FALSE) is the probability density function. It returns the probability density of the distribution for any value that can be obtained from the distribution.

For example, in the above histogram, we can see that the distribution produces values roughly between -3 and 3, or perhaps -4 to 4. We also see that as values approach 0, they happen more often. So, the probability density changes across the distribution. We can plot this directly using dorm(), by supplying a sequence of value, say from -4 to 4.

library(ggplot2)

some_data <- data.frame(density = dnorm(-4:4, mean = 0, sd = 1),

x = -4:4)

knitr::kable(some_data)| density | x |

|---|---|

| 0.0001338 | -4 |

| 0.0044318 | -3 |

| 0.0539910 | -2 |

| 0.2419707 | -1 |

| 0.3989423 | 0 |

| 0.2419707 | 1 |

| 0.0539910 | 2 |

| 0.0044318 | 3 |

| 0.0001338 | 4 |

ggplot(some_data, aes(x=x, y=density)) +

geom_point() To generate a plot of the full distribution in R, you could calculate additional intervening values on the x-axis, and use a line plot.

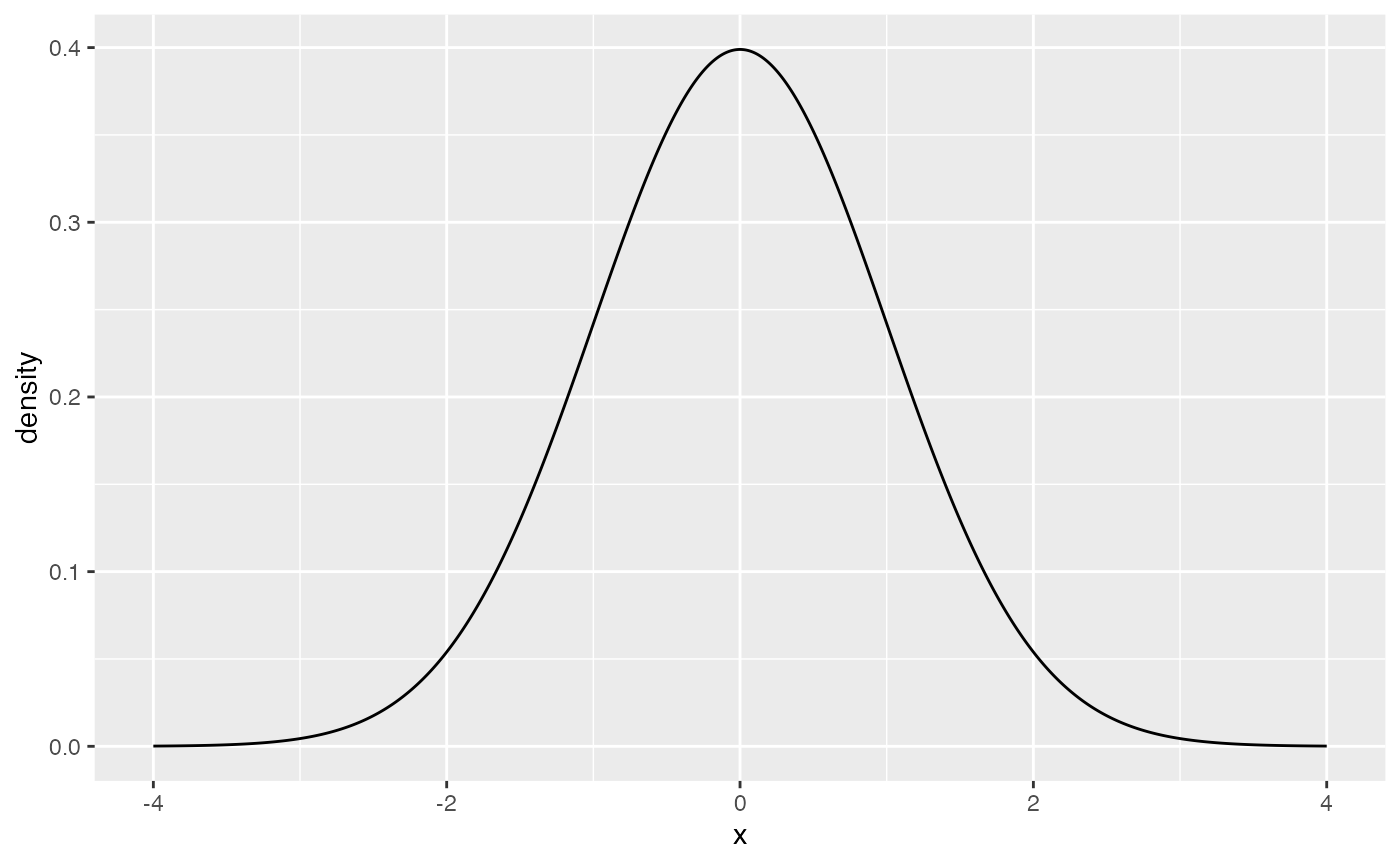

To generate a plot of the full distribution in R, you could calculate additional intervening values on the x-axis, and use a line plot.

some_data <- data.frame(density = dnorm(seq(-4,4,.001), mean = 0, sd = 1),

x = seq(-4,4,.001))

ggplot(some_data, aes(x=x, y=density)) +

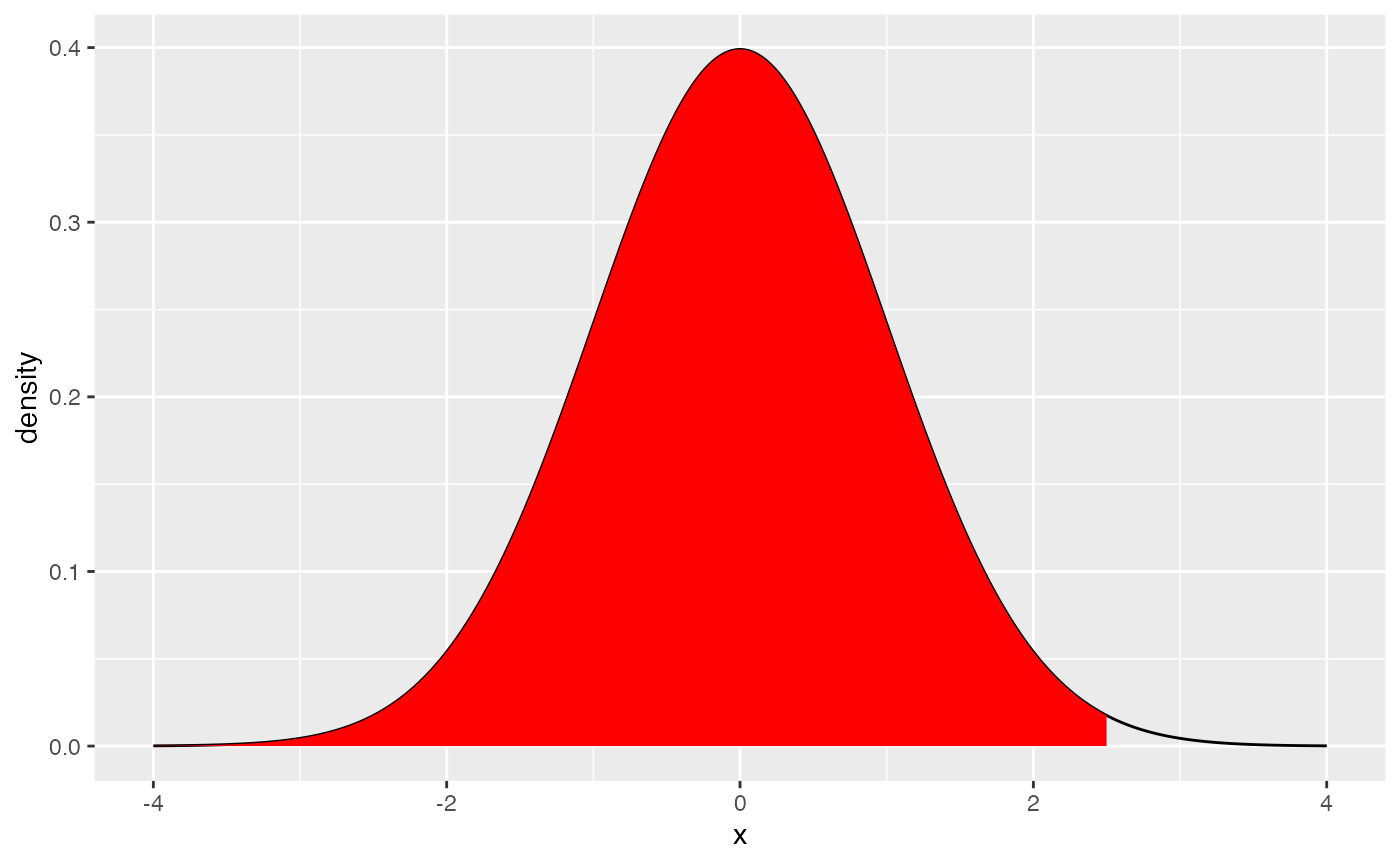

geom_line() Note, that this probability density function could be consulted to ask a question like, what is the probability of getting a value larger than 2.5? The answer is given by the area under the curve (in red).

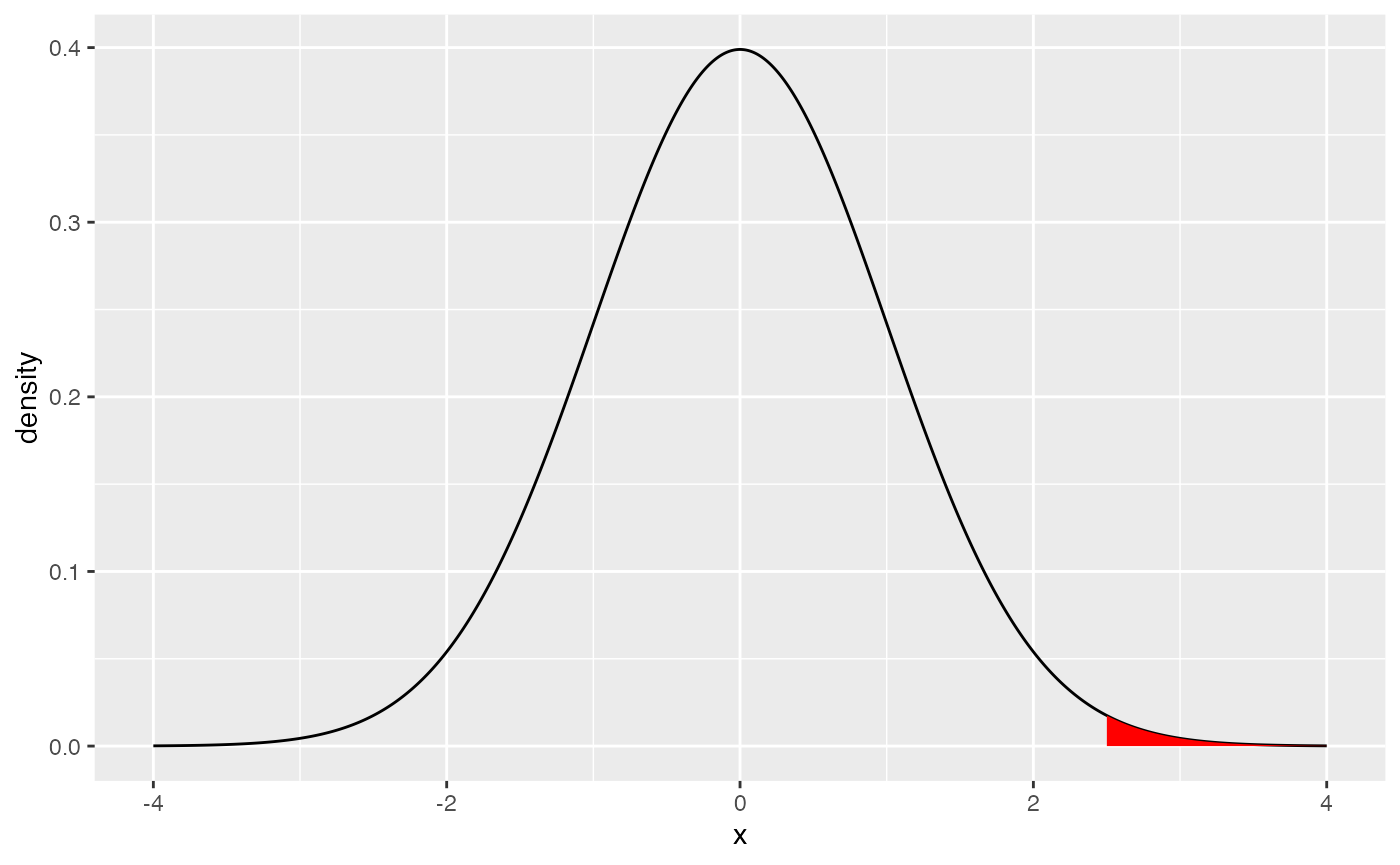

Note, that this probability density function could be consulted to ask a question like, what is the probability of getting a value larger than 2.5? The answer is given by the area under the curve (in red).

library(dplyr)

#>

#> Attaching package: 'dplyr'

#> The following objects are masked from 'package:stats':

#>

#> filter, lag

#> The following objects are masked from 'package:base':

#>

#> intersect, setdiff, setequal, union

some_data <- data.frame(density = dnorm(seq(-4,4,.001), mean = 0, sd = 1),

x = seq(-4,4,.001))

region_data <- some_data %>%

filter(x > 2.5)

ggplot(some_data, aes(x=x, y=density)) +

geom_line()+

geom_ribbon(data = region_data,

fill = "red",

aes(ymin=0,ymax=density)) ### pnorm

### pnorm

pnorm(q, mean = 0, sd = 1, lower.tail = TRUE, log.p = FALSE) takes a given value that the distribution could produce as an input called q for quantile. The function then returns the proportional area under the curve up to that value.

For example, what is the area under the curve starting from the left (e.g., negative infinity) all the way to 2.5?

pnorm(1.64, mean=0, sd = 1)

#> [1] 0.9494974This is the “complement” of our question. That is 99.37903% of all values drawn from the distribution are expected to be less than 2.5. We have just determined the probability of getting a number smaller than 2.5, otherwise known as the lower tail.

some_data <- data.frame(density = dnorm(seq(-4,4,.001), mean = 0, sd = 1),

x = seq(-4,4,.001))

region_data <- some_data %>%

filter(x < 2.5)

ggplot(some_data, aes(x=x, y=density)) +

geom_line()+

geom_ribbon(data = region_data,

fill = "red",

aes(ymin=0,ymax=density)) By default,

By default, pnorm calculates the lower tail, or the area under the curve from the q value point to the left side of the plot.

To calculate the the probability of getting a number larger than a particular value you can take the complement, or set lower.tail=FALSE

qnorm

qnorm(p, mean = 0, sd = 1, lower.tail = TRUE, log.p = FALSE) is similar to pnorm, but it takes a probability as an input, and returns a specific point value (or quantile) on the x-axis. More formally, you specify a proportional area under the curve starting from the left, and the function tells which number that area corresponds to.

For the remaining we assume mean=0 and sd =1.

What number on the x-axis is the location where 25% of the values are smaller than that value?

qnorm(.25, mean= 0, sd =1)

#> [1] -0.6744898What number on the x-axis is the location where 50% of the values are smaller than that value?

qnorm(.5, mean= 0, sd =1)

#> [1] 0What is the number where 95% of the values are larger than that number?

Conceptual: Sampling Distributions

When we collect data we assume they come from some “distribution”, which “causes” some numbers to occur more than others. The data we collect is a “sample” or portion of the “distribution”.

We know that when we sample from distributions chance can play a role. Specifically, by chance alone one sample of observations could look different from another sample, even if they came from the same distribution. In other words, we recognize that the process of sampling from a distribution involves variability or uncertainty.

We can use sampling distributions as a tool to help us understand and predict how our sampling process will behave. This way we can have information about how variable or uncertain our samples are.

Confusing jargon

Throughout this course you will come across terms like, “sampling distributions”, “the sampling distribution of the sample mean”, “the sampling distribution of any sample statistic”, “the standard deviation of the sampling distribution of the sample mean is the standard error of the mean”. Although all of these sentence are hard to parse and in my opinion very jargony, they all represent very important ideas that need to be distinguished and well understood. We are going to work on these things in this lab.

The sample mean

For all of the remaining examples we will use a normal distribution with mean = 0 and sd =1.

We already know what the sample mean is and how to calculate it in R. Here is an example of calculating a sample mean, where the number of observations (n) in the sample is 10.

Multiple sample means

We can repeat the above process as many times as we like, each time creating a sample of 10 observations and computing the mean.

Here is an example of creating 5 sample means from 5 sets of 10 observations.

mean(rnorm(10, mean=0, sd =1))

#> [1] 0.6237751

mean(rnorm(10, mean=0, sd =1))

#> [1] 0.4509605

mean(rnorm(10, mean=0, sd =1))

#> [1] 0.5084535

mean(rnorm(10, mean=0, sd =1))

#> [1] -0.09471295

mean(rnorm(10, mean=0, sd =1))

#> [1] 0.02905442Notice each of the sample means is different, this is because of the variability introduced by randomly choosing values from the same normal distribution.

The mean of the distribution that the samples come from is 0, what do we expect the mean of the samples to be? In general, we expect 0, but we can see that not all of the sample means are exactly 0.

How much variability can we expect for our sample mean? In other words, if we are going to obtain a sample of 10 numbers from this distribution, what kinds of sample means could we get?.

The sampling distribution of the sample means

The answer to the question what kinds of sample means could we get? is “the sampling distribution of the sample means”. In other words, if you do the work to actually find out and create a bunch of samples, and then find their means, then you have a bunch of sample means, and all of these numbers form a distribution. This distribution is effectively showing you all of the different ways that random chance can produce particular sample means.

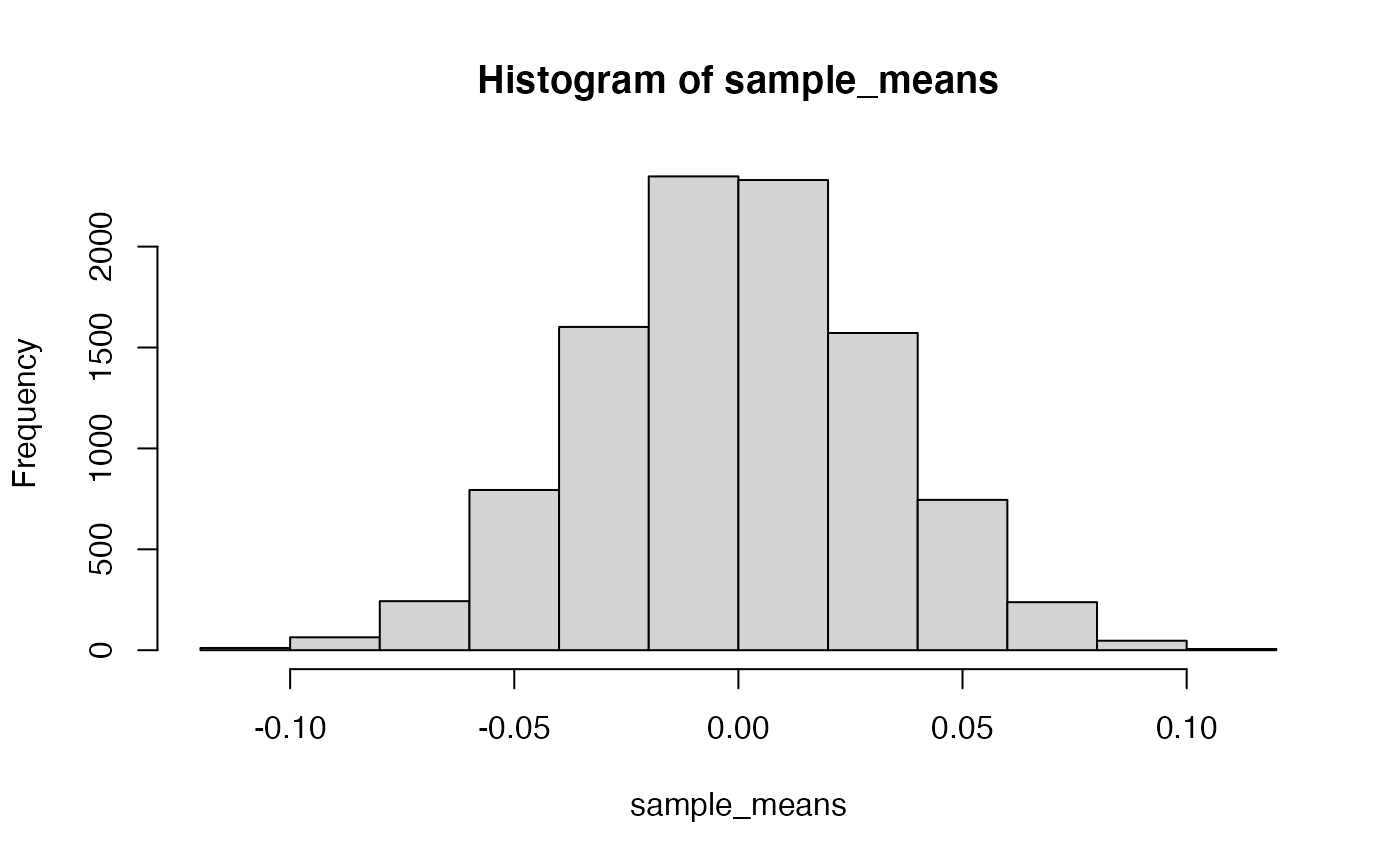

Let’s make a distribution of sample means. We will create 10,000 samples, each with 10 observations, and compute the mean for each. We will save and look at all of the means in a histogram.

sd(sample_means)

#> [1] 0.03211106The above is a histogram representing the means for 10,000 samples. We can refer to this as a sampling distribution of the sample means. It is how sample means (in this one particular situation) are generally distributed.

If you wanted to know what to expect from a single sample mean (if you knew you were taking values from this normal distribution), then you could look at this sampling distribution.

Sample means close to 0 happen the most. So, most of the time, when you take a sample from this distribution, the mean of that sample will be 0. It is rare for a sample mean to be larger than .5. It is very very rare for a sample mean to be larger than 1.

The standard error the mean

We have not discussed the concept or definition of the standard error the mean in the lecture portion of this class. However, we have seen that the mean for a sample taken from a distribution has expected variability, specifically the fact that there is a distribution of different sample means shows that there is variability.

What descriptive statistic have we already discussed that provides measures of variability? One option is the standard deviation. For example, we could do the following to measure the variability associated with a distribution of sample means.

- Generate a distribution of sample means

- Calculate the standard deviation of the sample means

The standard deviation of the sample means would give us an idea of how much variability we expect from our sample mean. We could do this quickly in R like this:

The value we calculated is a standardized unit, and it describes the amount of error we expect in general from a sample mean. Specifically, if the true population mean is 0, then when we obtain samples, we expect the sample means will have some error, they should on average be 0, but plus or minus the standard deviation we calculated.

We do not necessarily have to generate a distribution of sample means to calculate the standard error. If we know the population standard deviation (\(\sigma\)), then we can use this formula for the standard error of the mean (SEM) is:

\(\text{SEM} = \frac{\sigma}{\sqrt{N}}\)

where \(\sigma\) is the population standard deviation, and \(N\) is the sample size.

We can also compare the SEM from the formula to the one we obtained by simulation, and we find they are similar.

Lab 5 Generalization Assignment

Instructions

In general, labs will present a discussion of problems and issues with example code like above, and then students will be tasked with completing generalization assignments, showing that they can work with the concepts and tools independently.

Your assignment instructions are the following:

- Work inside the R project “StatsLab1” you have been using

- Create a new R Markdown document called “Lab5.Rmd”

- Use Lab5.Rmd to show your work attempting to solve the following generalization problems. Commit your work regularly so that it appears on your Github repository.

- For each problem, make a note about how much of the problem you believe you can solve independently without help. For example, if you needed to watch the help video and are unable to solve the problem on your own without copying the answers, then your note would be 0. If you are confident you can complete the problem from scratch completely on your own, your note would be 100. It is OK to have all 0s or 100s anything in between.

- Submit your github repository link for Lab 5 on blackboard.

- There are five problems to solve

Problems

Trust but verify. We trust that the

rnorm()will generate random deviates in accordance with the definition of the normal distribution. For example, we learned in this lab, that a normal distribution with mean = 0, and sd =1 , should only produce values larger than 2.5 with a specific small probability, that is P(x>2.5) = 0.006209665. Verify this is approximately the case by randomly sampling 1 million numbers from this distribution, and calculate what proportion of numbers are larger than 2.5. (1 point)If performance on a standardized test was known to follow a normal distribution with mean 100 and standard deviation 10, and 10,000 people took the test, how many people would be expected to achieve a score higher than 3 standard deviations from the mean? (1 point)

You randomly sample 25 numbers from a normal distribution with mean = 10 and standard deviation = 20. You obtain a sample mean of 12. You want to know the probability that you could have received a sample mean of 12 or larger.

Create a sampling distribution of the mean for this scenario with at least 10,000 sample means (1 point). Then, calculate the proportion of sample means that are 12 or larger (1 point).

- You randomly sample 100 numbers from a normal distribution with mean = 10 and standard deviation = 20. You obtain a sample mean of 12. You want to know the probability that you could have received a sample mean of 12 or larger.

Create a sampling distribution of the mean for this scenario with at least 10,000 sample means. Then, calculate the proportion of sample means that are 12 or larger. Is the proportion different from question 3, why? (1 point).

- You randomly sample 25 numbers from a normal distribution with mean = 10 and standard deviation = 20. You obtain a sample standard deviation of 15. You want to know the probability that you could have received a sample standard deviation of 15 or less.

Create a sampling distribution of standard deviations for this scenario with at least 10,000 sample standard deviations. Then, calculate the proportion of sample standard deviations that are 15 or less. (1 point)